Tokenization

Before an AI model can process anything, it breaks your input into tokens: small chunks such as words, parts of words, or even single characters.

Most AI APIs charge per token, counting both your input and the model’s response. Yes, every word (or chunk of a word) counts.
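To make the billing concrete, here is a minimal Python sketch of a per-request cost estimate. The prices are made-up placeholders (many providers quote rates per million tokens, and real rates differ and change), and `estimate_cost` is a hypothetical helper, not part of any provider's SDK.

```python
# Hypothetical prices in US dollars per 1 million tokens.
# Real providers publish their own rates; check their pricing pages.
INPUT_PRICE_PER_M = 2.50
OUTPUT_PRICE_PER_M = 10.00


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the cost of one request, billing input and output tokens separately."""
    return (input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M) / 1_000_000


# A 2,000-token prompt that gets a 500-token answer:
print(f"${estimate_cost(2_000, 500):.4f}")  # $0.0100
```

One request is cheap, but the same arithmetic applied to thousands of requests, or to very long inputs, is where the bill grows.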

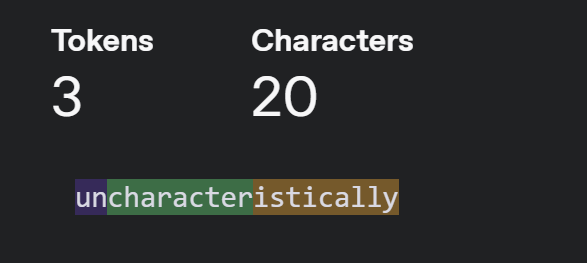

A word like "hello" is usually a single token. But something longer like "uncharacteristically" might break into 3 tokens, depending on the tokenizer.
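Here is a toy sketch of why a long word can become several tokens. It uses a greedy longest-match splitter over a tiny, hypothetical vocabulary. OpenAI's tokenizers use byte-pair encoding with a vocabulary learned from data, so their actual splits and counts will differ; this only shows the general idea of covering a word with known pieces.

```python
# Hypothetical toy vocabulary; real tokenizers learn tens of thousands of pieces from data.
VOCAB = {"hello", "un", "character", "characteristic", "ally"}


def tokenize(word: str) -> list[str]:
    """Greedy longest-match split; characters not covered by VOCAB become single-character tokens."""
    tokens = []
    i = 0
    while i < len(word):
        # Try the longest remaining piece first, shrinking until a vocabulary entry matches
        # (or only one character is left, which is always accepted as a fallback).
        for end in range(len(word), i, -1):
            piece = word[i:end]
            if piece in VOCAB or end == i + 1:
                tokens.append(piece)
                i = end
                break
    return tokens


print(tokenize("hello"))                 # ['hello']
print(tokenize("uncharacteristically"))  # ['un', 'characteristic', 'ally']
```

Note that "characteristic" wins over the shorter "character" because the splitter always prefers the longest match.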

It adds up fast when you're working with big inputs.

Language Differences

English is pretty efficient: one common word often maps to one token. But if you're working with Chinese, Japanese, or Arabic, the same message could take 2–3 times as many tokens.

Because most tokenizers are trained on English-heavy data, their vocabularies have fewer entries for other scripts, so text in those scripts gets sliced into more, smaller pieces.
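One way to see the mechanism: many GPT-style tokenizers work at the byte level, starting from a text's UTF-8 bytes and merging byte sequences that were frequent in training into single tokens. English letters take 1 byte each in UTF-8, most Chinese characters take 3, and Arabic letters take 2, so when a tokenizer has learned few merges for a script, each character can cost several tokens. This sketch only counts characters and bytes (for a byte-level tokenizer, the byte count is a rough upper bound on tokens), not real tokens; the greetings are just illustrative samples.

```python
def utf8_profile(text: str) -> tuple[int, int]:
    """Return (character count, UTF-8 byte count) for a string."""
    return len(text), len(text.encode("utf-8"))


# The same greeting in three languages (illustrative samples).
samples = {
    "English": "hello",
    "Chinese": "你好",
    "Arabic": "مرحبا",
}

for language, text in samples.items():
    n_chars, n_bytes = utf8_profile(text)
    print(f"{language}: {n_chars} characters, {n_bytes} UTF-8 bytes")
```

The Chinese greeting is only 2 characters but 6 bytes, which is why a byte-level tokenizer without good merges for Chinese can spend more tokens on it than on "hello".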

If you're building for global users, costs might surprise you: a message in Chinese could use twice as many tokens as the same message in English.

And if you're paying per token, your language choice can affect how fast you burn through a free tier or monthly cap.
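To put the cap in numbers, here is a back-of-the-envelope sketch. The 1,000,000-token monthly cap and the 500-token message are hypothetical, `messages_per_month` is an illustrative helper, and the 2x multiplier is the rough Chinese-vs-English figure mentioned above, not a measured value.

```python
def messages_per_month(monthly_cap: int, english_tokens: int, multiplier: float) -> int:
    """Estimate how many messages fit under a monthly token cap.

    `multiplier` scales the English token count to approximate another language
    (an assumption; measure real counts with the provider's tokenizer).
    """
    tokens_per_message = english_tokens * multiplier
    return int(monthly_cap // tokens_per_message)


MONTHLY_CAP = 1_000_000  # hypothetical cap, in tokens

print(messages_per_month(MONTHLY_CAP, 500, 1.0))  # English: 2000
print(messages_per_month(MONTHLY_CAP, 500, 2.0))  # Chinese at ~2x: 1000
```

Doubling the tokens per message halves how many messages fit under the same cap.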

Try OpenAI's tokenizer tool. Paste the same sentence in different languages. You’ll see how the token count shifts and why some translations cost more.

You can also plug in something fun like “supercalifragilisticexpialidocious” and see how it splits.

Text = Tokens = Money

You might think you're sending a short message, but models don’t count words. They count tokens. That’s how tokens sneak up on you: a long variable name here, a Markdown table there… it adds up.

Once you see how text gets chopped up, it’s easier to figure out what’s driving the cost.

Until next time.

Resources: